Advancing UAV-Based Spatio-Temporal Image Registration For Crop Breeding Programs

This project is designed to address one of the most critical challenges in agricultural research: the accurate alignment and analysis of large volumes of aerial imagery collected over time from Unmanned Aerial Vehicles (UAVs). Crop breeding programs depend heavily on precise phenotypic measurements across different stages of plant growth, yet variations in flight paths, illumination conditions, and field heterogeneity often make consistent temporal comparisons extremely difficult. This project focuses on developing a robust image registration framework that leverages the inherent geometric structure of agricultural fields, such as planting rows, block designs, and experimental plot boundaries, to anchor UAV imagery across multiple time points. By aligning data not just spatially but also temporally, the framework allows researchers to track subtle growth patterns, phenotypic variations, and treatment effects with high fidelity, ultimately enabling more informed selection decisions and advancing modern breeding pipelines.

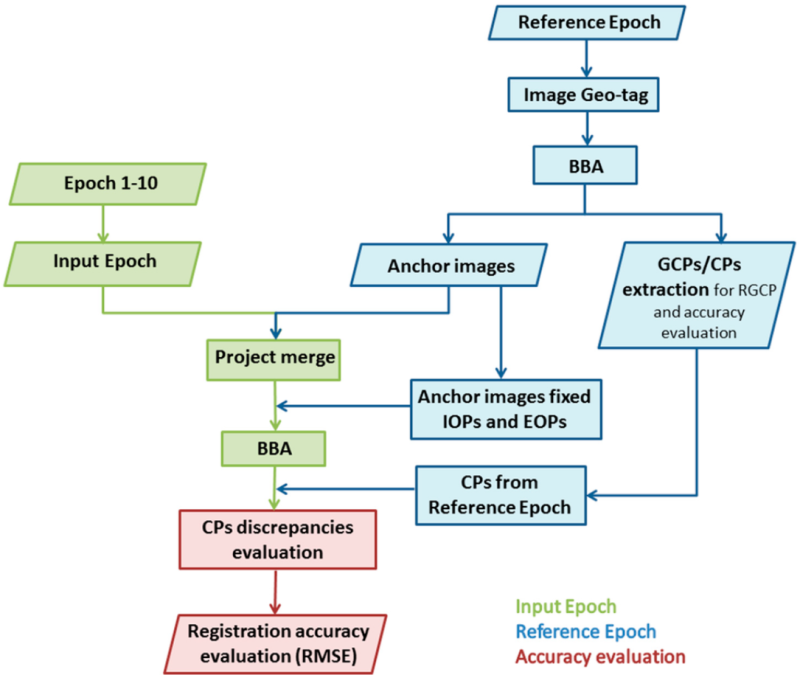

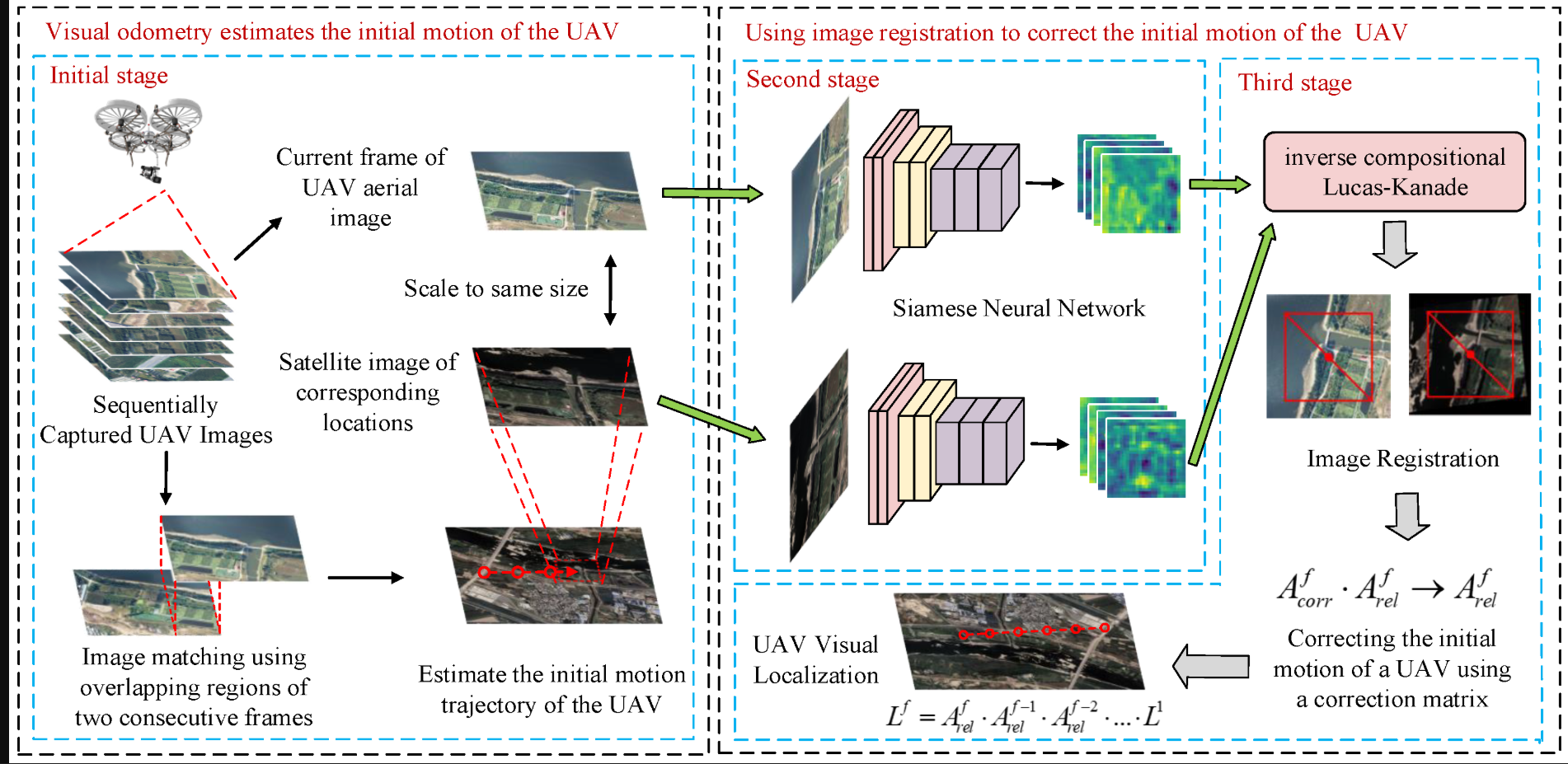

We integrate spatio-temporal modeling with computer vision techniques so that each pixel and feature across sequential UAV flights can be accurately mapped to its corresponding field location and crop instance. Traditional image registration methods often struggle in open agricultural landscapes because of the repetitive textures of crops. This system instead uses field-specific geometric features, such as row orientations, intersection points, and experimental layout markers, as stable anchors for registration. These structural cues enable the system to correct for positional drift, scale differences, and perspective distortions while maintaining consistency across growth stages in which plant size, canopy density, and spectral signatures change significantly. Additionally, by combining temporal consistency checks with geometric anchoring, the system improves robustness against environmental noise, shadow variations, and UAV positional inaccuracies. This allows the generation of time-series datasets that are spatially coherent and phenotypically reliable, supporting high-throughput phenotyping at scale.
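To make the idea of geometric anchoring concrete, the following is a minimal sketch, not the project's actual implementation. It assumes that matching anchor points (for example, plot-corner or row-intersection coordinates) have already been detected in a reference flight and in a later flight. It fits a least-squares similarity transform (scale, rotation, and translation) that maps the later flight onto the reference frame. The function names `fit_similarity` and `apply_similarity` and all coordinates are hypothetical. Perspective distortion is deliberately left out; handling it would require a homography rather than a similarity transform.

```python
import cmath
import math


def fit_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst points.

    Points are (x, y) tuples. Treating each point as a complex number z,
    the transform is z -> a * z + b, where abs(a) is the scale,
    phase(a) is the rotation, and b is the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched anchor points")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Closed-form least-squares solution on centered coordinates.
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("anchor points must not all coincide")
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply z -> a * z + b to a list of (x, y) points."""
    out = []
    for x, y in points:
        z = a * complex(x, y) + b
        out.append((z.real, z.imag))
    return out


# Hypothetical plot-corner anchors in the reference flight (metres).
reference = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]

# Simulate a later flight with drift: 10% scale change, 3 degree rotation,
# and a (2, -1) shift. In practice these would come from detected anchors.
true_a = cmath.rect(1.1, math.radians(3.0))
true_b = complex(2.0, -1.0)
later = apply_similarity(true_a, true_b, reference)

# Estimate the transform that brings the later flight back to the reference.
a, b = fit_similarity(later, reference)
registered = apply_similarity(a, b, later)

scale = abs(a)
rotation_deg = math.degrees(cmath.phase(a))
residual = max(
    math.hypot(rx - x, ry - y)
    for (rx, ry), (x, y) in zip(registered, reference)
)
print(f"scale={scale:.4f} rotation={rotation_deg:.2f} deg residual={residual:.2e}")
```

With real imagery the anchors would be noisy, so the residual after fitting gives a simple check on registration quality: a large value for a given flight suggests mismatched anchors or distortion that a similarity transform cannot model.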

The broader impact of this project lies in its potential to transform crop breeding workflows by making UAV-based phenotyping faster, more accurate, and more scalable. With properly registered spatio-temporal imagery, breeders can move beyond manual scoring methods and use computational tools to quantify traits such as canopy cover, growth rate, flowering dynamics, and stress responses with greater precision and consistency. Moreover, the framework opens the door to integrating multimodal UAV data, including RGB, multispectral, thermal, and hyperspectral imagery, into a unified temporal space, enabling holistic insights into plant health and productivity. By reducing data misalignment errors and ensuring temporal continuity, the system provides breeders with a reliable digital twin of field experiments, facilitating better decision-making and accelerating genetic gains. In the long run, this advancement will not only strengthen crop breeding programs but also contribute to global food security by supporting the development of climate-resilient, high-yielding crop varieties that can thrive under diverse and challenging agricultural conditions.

Faculty

- Dr. Muhammad Moazam Fraz
- Dr. Zuhair Zafar

Students

- Muhammad Salman Akhtar