Detection & Segmentation for Autonomous Vehicles

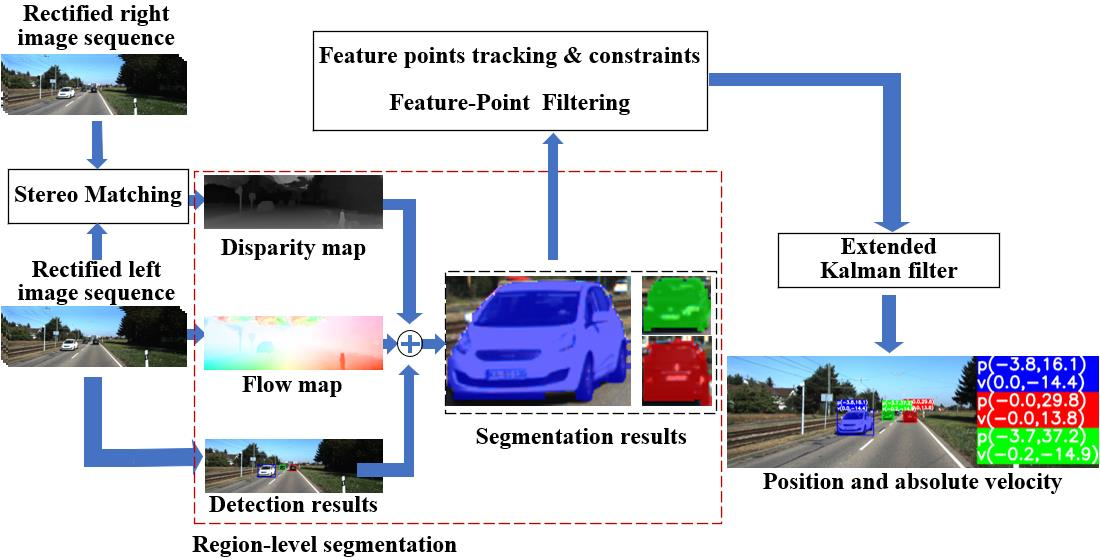

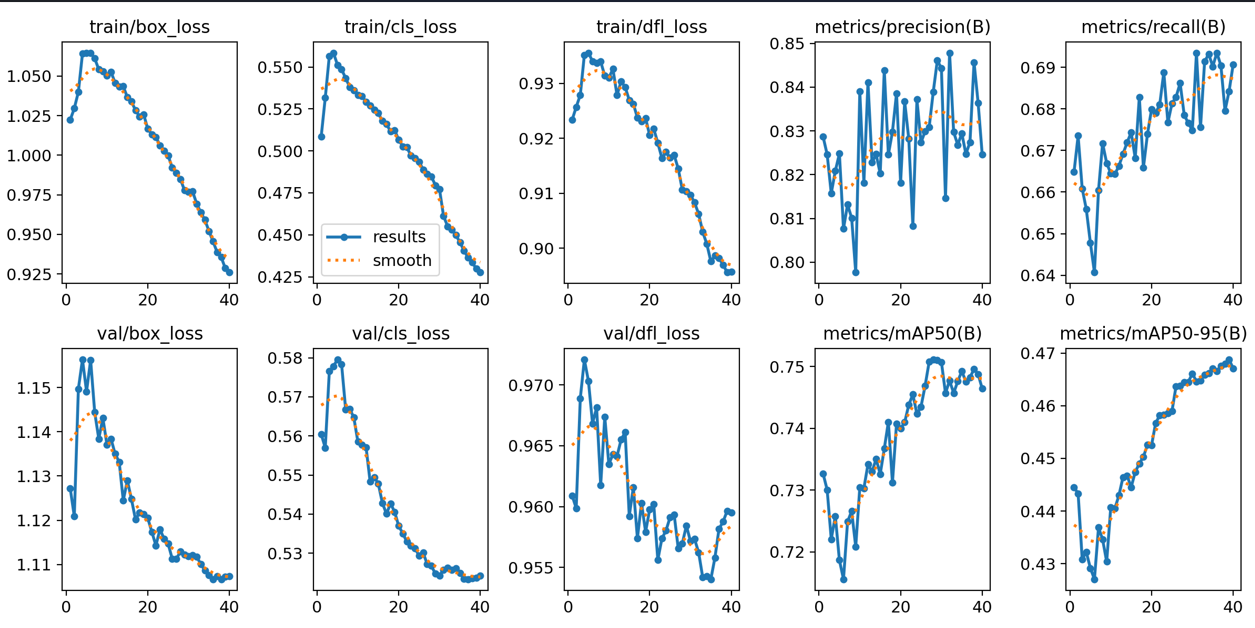

In this project, we build a perception framework for self-driving cars around the YOLOv8 object detector. State-of-the-art detectors such as YOLOv8 are fast and architecturally streamlined to run in real time, which makes them well suited to embedded automotive applications. The workflow explores the dataset, configures directories, and runs training in a single coherent pipeline. The dataset pairs driving images with label files in CSV format, giving a clean, practical base for training a vehicle detector. As training proceeds, the pipeline writes model weights, performance logs, and metrics to designated directories, so every stage from input data to trained model can be inspected. The result is an end-to-end setup suited to experimentation and benchmark comparisons.
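YOLOv8 (via the Ultralytics tooling) trains on one plain-text label file per image, with one line per object: the class index followed by box center x, center y, width, and height, each normalized to [0, 1] by the image size. The CSV schema is not given here, so the sketch below assumes hypothetical columns `image`, `xmin`, `ymin`, `xmax`, `ymax`, and `class_id` in pixel coordinates, and a single image size passed in by the caller. It is a minimal illustration of the conversion, not the project's own preprocessing code.

```python
import csv
import io
from collections import defaultdict


def to_yolo_line(class_id: int, xmin: float, ymin: float, xmax: float, ymax: float,
                 img_w: int, img_h: int) -> str:
    """Convert one pixel-space corner box into a normalized YOLO label line."""
    # Clamp the box to the image bounds so normalized values stay within [0, 1].
    xmin, xmax = max(0.0, xmin), min(float(img_w), xmax)
    ymin, ymax = max(0.0, ymin), min(float(img_h), ymax)
    x_c = (xmin + xmax) / 2 / img_w
    y_c = (ymin + ymax) / 2 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def csv_to_yolo(csv_text: str, img_w: int, img_h: int) -> dict[str, list[str]]:
    """Group CSV rows by image and return {label_filename: [YOLO lines]}.

    Assumes HYPOTHETICAL columns: image, xmin, ymin, xmax, ymax, class_id.
    """
    labels: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        stem = row["image"].rsplit(".", 1)[0]  # frame_001.jpg -> frame_001
        labels[stem + ".txt"].append(
            to_yolo_line(int(row["class_id"]),
                         float(row["xmin"]), float(row["ymin"]),
                         float(row["xmax"]), float(row["ymax"]),
                         img_w, img_h)
        )
    return dict(labels)


if __name__ == "__main__":
    sample = (
        "image,xmin,ymin,xmax,ymax,class_id\n"
        "frame_001.jpg,100,200,300,400,0\n"
        "frame_001.jpg,500,250,700,350,0\n"
    )
    for name, lines in csv_to_yolo(sample, img_w=1000, img_h=500).items():
        print(name)
        print("\n".join(lines))
```

In practice each image's own width and height should be used (for example, read with an image library); one fixed size is assumed here only to keep the sketch dependency-free. Each returned list would be written to a `.txt` file alongside its image.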

The project also emphasizes reproducibility and hands-on usability. It streamlines the train-and-evaluate cycle and provides real-time visualizations of model performance. Images embedded directly in the notebook show detection results and performance trends, making it easier to see how the model learns, where it fails, and where it performs well. This feedback loop is essential when fine-tuning detectors for complex real-world scenes, which contain vehicles of varied shapes and sizes under different degrees of occlusion. Because YOLOv8 is designed with deployment in mind, the trained model files are compact and ready for inference. This makes the workflow a starting point for developers who plan to run detection on edge hardware in real vehicles, from research prototypes and campus shuttles to large-scale autonomous fleets.
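Seeing where a detector misfires usually comes down to comparing predicted boxes with ground truth by intersection over union (IoU). YOLOv8's validation reports mAP at an IoU threshold of 0.5 and averaged over thresholds from 0.5 to 0.95; the sketch below is a simplified, dependency-free illustration of only the underlying matching step. It assumes boxes in (xmin, ymin, xmax, ymax) pixel format, greedily matches predictions (highest confidence first) to unmatched ground-truth boxes at one IoU threshold, and counts true positives, false positives, and false negatives. It is not the Ultralytics evaluation code.

```python
# Simplified illustration; NOT the Ultralytics evaluation implementation.
Box = tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: list[tuple[Box, float]], gts: list[Box],
                     iou_thr: float = 0.5) -> tuple[int, int, int]:
    """Greedy one-to-one matching; returns (true positives, false positives, false negatives)."""
    matched: set[int] = set()
    tp = fp = 0
    # Visit predictions from most to least confident so higher scores claim ground truth first.
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # duplicate, mislocalized, or background detection
    fn = len(gts) - len(matched)  # vehicles the model missed
    return tp, fp, fn


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [((1, 1, 10, 10), 0.9),    # good hit on the first vehicle
             ((0, 0, 10, 10), 0.8),    # duplicate of the first vehicle
             ((50, 50, 60, 60), 0.7)]  # background false alarm
    tp, fp, fn = match_detections(preds, gts)
    print(f"TP={tp} FP={fp} FN={fn} precision={tp / (tp + fp):.2f} recall={tp / (tp + fn):.2f}")
```

For this toy input the script prints `TP=1 FP=2 FN=1 precision=0.33 recall=0.50`: the duplicate box counts as a false positive, and the second vehicle is never found. Sweeping the confidence cutoff and repeating this count is what traces out a precision-recall curve.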

The project's open nature and clean licensing add to its long-term value. Whether you want to integrate semantic segmentation modules, experiment with additional sensors such as lidar or thermal cameras, or customize post-processing logic for lane-level density mapping or near-miss detection, the codebase is a solid starting point. Its modular structure allows new functionality, such as multi-class segmentation, real-time tracking, or integration into ROS pipelines, to be added incrementally. By deploying the pipeline in simulated or real driving environments, stakeholders can tune detection thresholds, run latency benchmarks, and measure edge-device performance, bridging the gap between experimental and deployable systems. Beyond demonstrating how to train a YOLOv8-based perception system, the project lays a foundation for building intelligent, road-ready AI systems for autonomous vehicles.
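Tuning detection thresholds and customizing post-processing both act on the raw boxes a detector emits. As an illustration only, the sketch below applies a confidence threshold and then class-agnostic greedy non-maximum suppression (NMS) in plain Python. Ultralytics YOLOv8 performs its own NMS internally and exposes the two corresponding knobs as the `conf` and `iou` arguments of `predict`; the function here is a hypothetical stand-in showing what those knobs control, not the library's implementation.

```python
# Hypothetical stand-in for the post-processing YOLOv8 runs internally.
Box = tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: list[tuple[Box, float]], conf_thr: float = 0.25,
                      iou_thr: float = 0.5) -> list[tuple[Box, float]]:
    """Drop low-confidence boxes, then suppress overlapping duplicates (greedy NMS)."""
    # 1. Confidence threshold: raising conf_thr removes false alarms but can also drop true vehicles.
    candidates = sorted((d for d in dets if d[1] >= conf_thr),
                        key=lambda d: d[1], reverse=True)
    kept: list[tuple[Box, float]] = []
    # 2. Greedy NMS: visit boxes by descending score and keep one only if it
    #    overlaps every already-kept box by less than iou_thr.
    for box, score in candidates:
        if all(iou(box, k) < iou_thr for k, _ in kept):
            kept.append((box, score))
    return kept


if __name__ == "__main__":
    raw = [((0, 0, 10, 10), 0.9),      # vehicle A
           ((1, 1, 10, 10), 0.8),      # near-duplicate of A (IoU 0.81)
           ((20, 20, 30, 30), 0.6),    # vehicle B
           ((40, 40, 50, 50), 0.1)]    # low-confidence noise
    for box, score in filter_detections(raw):
        print(box, score)
```

With the defaults above, the near-duplicate of vehicle A is suppressed and the 0.1-confidence box is dropped, leaving one box per vehicle. Lowering `iou_thr` suppresses more aggressively, which helps with duplicates but can merge adjacent vehicles in dense traffic, so both thresholds are worth sweeping against the validation metrics.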

Faculty

- Dr. Muhammad Moazam Fraz

Students

- Sara Adnan Ghori
- Usama Athar