Gesture-Based Volume Control from Video Feed

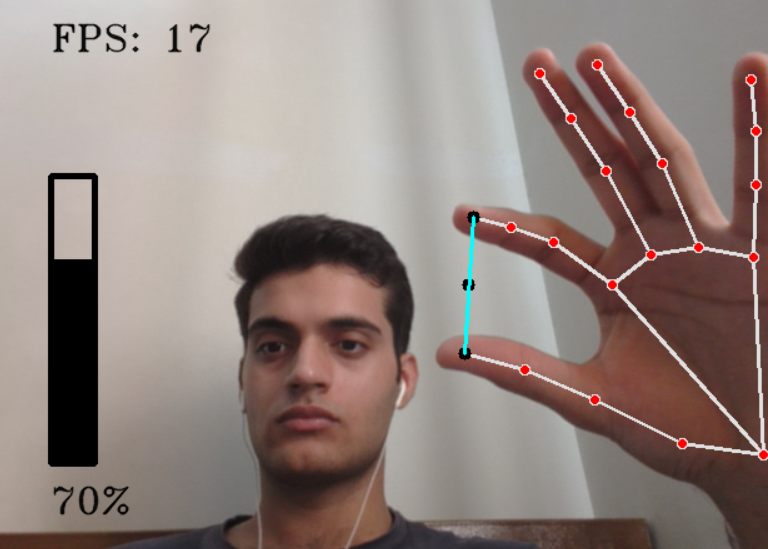

Imagine adjusting your device’s volume with a simple pinch: no mouse, no buttons, nothing to touch. This project turns an everyday hand gesture into an intuitive control. You raise or lower the volume just by moving your thumb and index finger in front of a camera. Your hand becomes the dial, the width of the pinch sets the level, and a live video feed translates your motion into an immediate change in what you hear. It moves gesture recognition out of science fiction and grounds it firmly in modern human-computer interaction, making control more natural, seamless, and even fun.

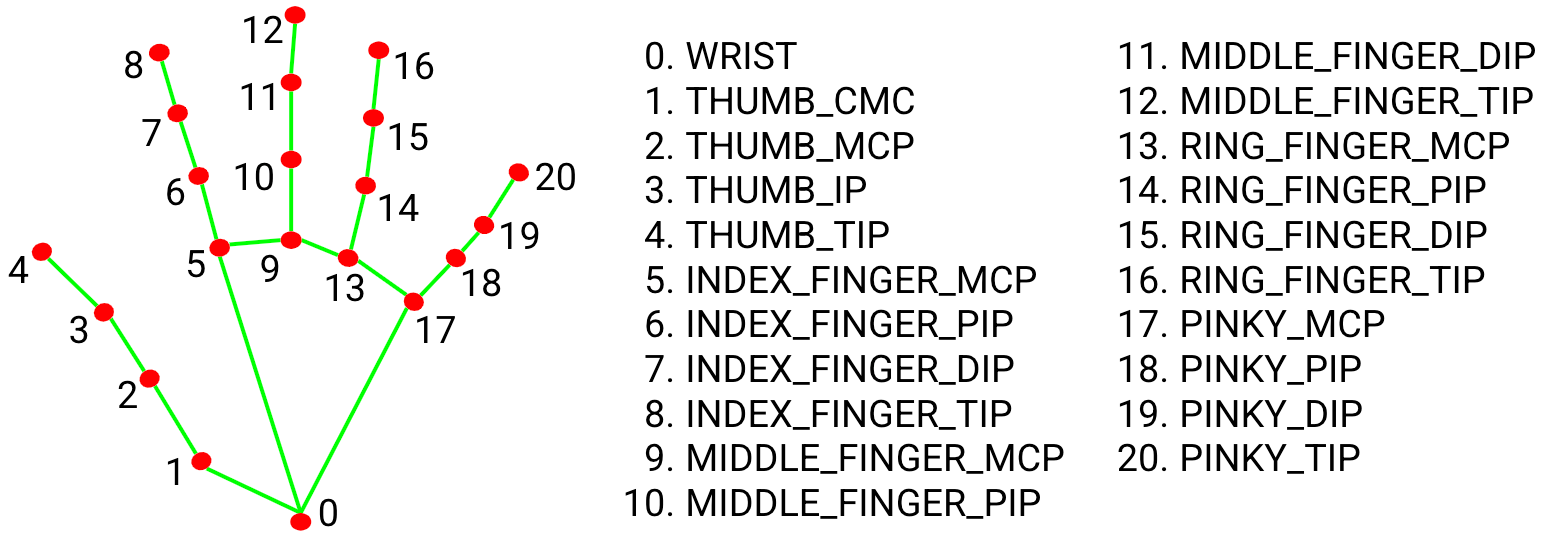

The system combines lightweight computer vision with system-level audio integration. A video feed, such as one from an ordinary webcam, captures hand movements. An on-device hand landmark detector tracks your fingertips and joints in real time. As your thumb and index finger move closer together or farther apart, the system continuously measures the distance between the two fingertips. It normalizes that distance against a chosen gesture range, so the tightest pinch corresponds to minimum volume and the widest spread to maximum volume. The resulting floating-point value is converted into a system-level volume change, so each gesture changes the audio output immediately and with smooth transitions. The result is a real-time feedback loop: see the gesture, interpret it, and hear the change.
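The distance-to-volume mapping described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project’s actual code.

- Fingertip positions are assumed to arrive as normalized (x, y) image coordinates. MediaPipe Hands, for example, reports the thumb tip as landmark 4 and the index fingertip as landmark 8.
- The gesture range constants `MIN_DIST` and `MAX_DIST` are hypothetical values you would tune for your own camera.
- The exponential moving average in `VolumeSmoother` is one possible way to get smooth transitions.
- `set_volume` is a hypothetical callback standing in for the platform-specific call that actually changes system volume. On Windows, for instance, the Core Audio method `IAudioEndpointVolume::SetMasterVolumeLevelScalar` accepts a level between 0.0 and 1.0.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical gesture range in normalized image coordinates; tune per camera.
MIN_DIST = 0.03  # fingertips pinched together -> minimum volume
MAX_DIST = 0.25  # fingers spread apart -> maximum volume


@dataclass
class Point:
    """A landmark position in normalized image coordinates (0.0 to 1.0)."""
    x: float
    y: float


def fingertip_distance(thumb_tip: Point, index_tip: Point) -> float:
    """Euclidean distance between the thumb tip and the index fingertip."""
    return math.hypot(index_tip.x - thumb_tip.x, index_tip.y - thumb_tip.y)


def distance_to_volume(dist: float, lo: float = MIN_DIST, hi: float = MAX_DIST) -> float:
    """Linearly map a distance in [lo, hi] to a level in [0.0, 1.0], clamping values outside the range."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    t = (dist - lo) / (hi - lo)
    return min(1.0, max(0.0, t))


class VolumeSmoother:
    """Exponential moving average that damps frame-to-frame landmark jitter."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha  # 0 < alpha <= 1; higher reacts faster, lower is smoother
        self.value: Optional[float] = None

    def update(self, target: float) -> float:
        if self.value is None:
            self.value = target  # first frame: no history to smooth against
        else:
            self.value += self.alpha * (target - self.value)
        return self.value


def control_step(thumb_tip: Point, index_tip: Point,
                 smoother: VolumeSmoother,
                 set_volume: Callable[[float], None]) -> float:
    """One pass of the loop: measure the pinch, map it, smooth it, apply it."""
    level = smoother.update(distance_to_volume(fingertip_distance(thumb_tip, index_tip)))
    set_volume(level)  # hypothetical platform-specific volume setter
    return level
```

Each processed video frame would call `control_step` once. A smaller `alpha` gives steadier output at the cost of slightly more lag behind the hand.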

This project is about more than the technology; it brings together elegant interaction design and meaningful accessibility. Where touch is inconvenient, the gesture interface provides effortless control. That includes working with wet or messy hands in the kitchen, driving, or simply having your hands full. It also opens the door to inclusive design: users with temporary mobility restrictions or certain disabilities may find it a life-changing interface.

From a technical standpoint, the system shows how vision models can meaningfully enhance everyday tools. It is easy to customize: you can change the mapping curve, adjust the gesture range, or overlay visual feedback. Because the design is modular, the same gesture-control principle could be extended to other functions, such as screen brightness, media playback, or even smart home devices. It is a compelling example of how natural gestures, real-time perception, and simple UI integration can reshape the way we interact with the digital world.
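Swapping the mapping curve, one of the customizations mentioned above, might look like the following sketch. The function names are hypothetical and do not come from the project itself. Each curve is applied to the normalized 0.0 to 1.0 level before it reaches the operating system.

The decibel variant reflects the fact that perceived loudness is roughly logarithmic in amplitude. Whether any extra curve is needed depends on the target volume API, since some platform volume controls already apply their own taper.

```python
from typing import Callable

# A curve reshapes a normalized level t in [0, 1] before it is sent to the OS.
Curve = Callable[[float], float]


def linear(t: float) -> float:
    """Identity curve: equal finger movement gives equal volume steps."""
    return t


def power_curve(gamma: float) -> Curve:
    """Return t -> t**gamma; gamma > 1 gives finer control at low volumes."""
    def curve(t: float) -> float:
        return t ** gamma
    return curve


def decibel_curve(min_db: float = -60.0) -> Curve:
    """Spread t linearly over [min_db, 0] dB and convert to an amplitude factor; t == 0 mutes."""
    def curve(t: float) -> float:
        if t <= 0.0:
            return 0.0
        db = min_db * (1.0 - t)
        return 10 ** (db / 20.0)
    return curve


def apply_curve(t: float, curve: Curve = linear) -> float:
    """Clamp t into [0, 1], then apply the chosen curve."""
    return curve(min(1.0, max(0.0, t)))
```

A level produced by the distance mapping can be passed straight through, for example `apply_curve(level, power_curve(2.0))`. The curve can then be changed without touching the gesture-tracking code.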

Faculty

- Dr. Muhammad Moazam Fraz

Students

- Abdullah Usama
- Usama Athar