An Expert-Guided Multimodal AI Ecosystem for Diagnostic Intelligence

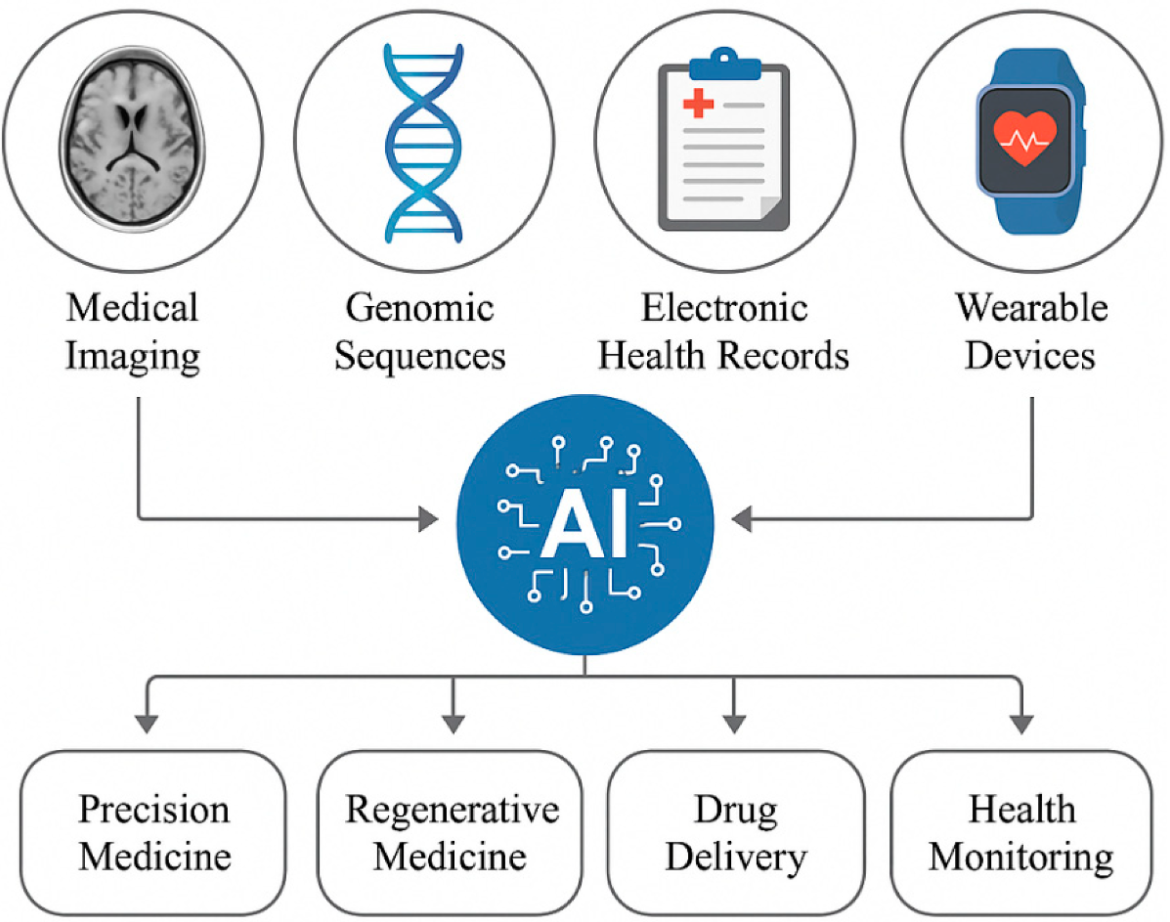

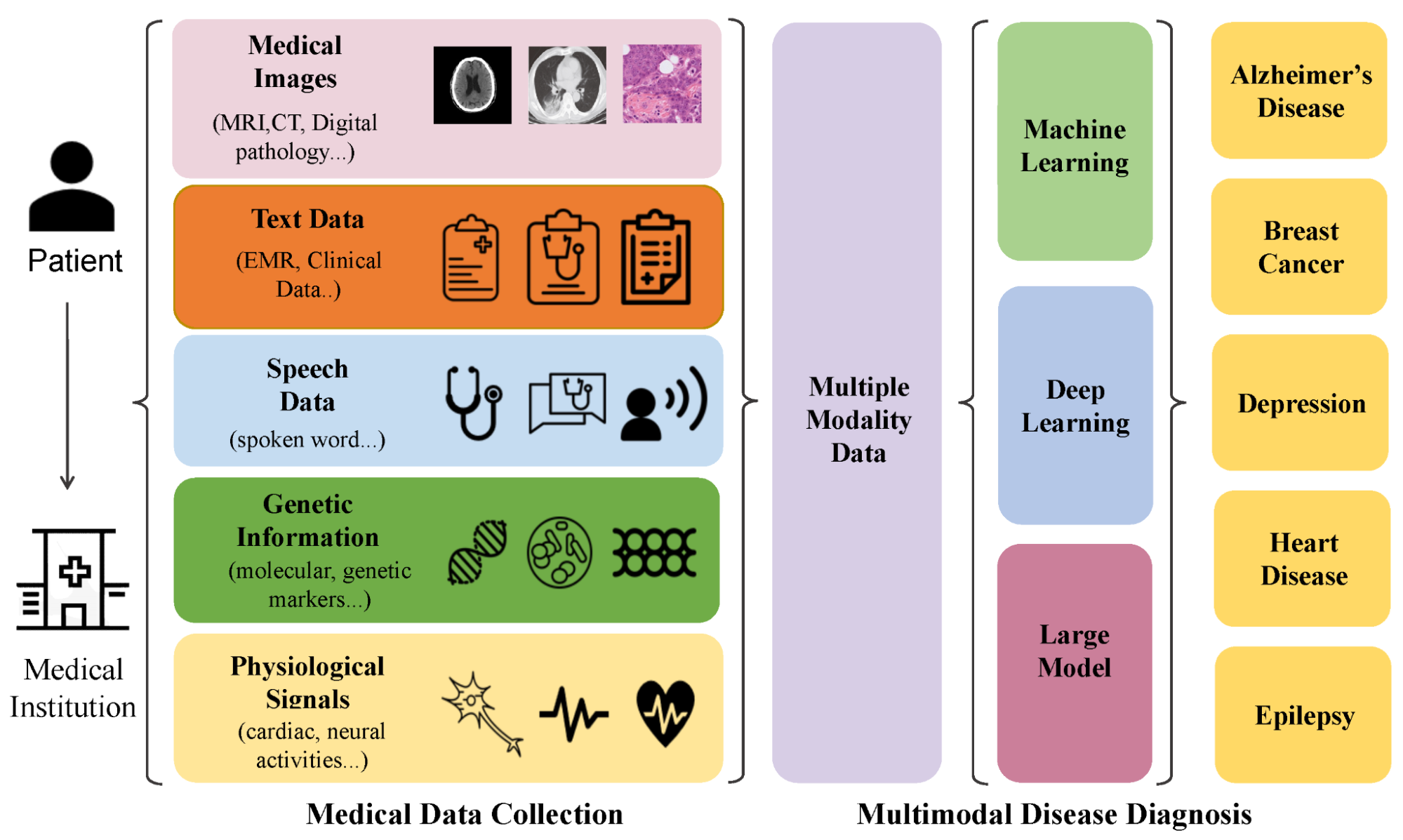

An Expert-Guided Multimodal AI Ecosystem for Diagnostic Intelligence is designed to tackle one of the most persistent challenges in modern radiology: the lack of fully integrated, adaptable, and interpretable AI systems for medical imaging. Deep learning models for tumor segmentation and natural language processing tools for report generation already exist, but they are often static, siloed, and difficult to translate into clinical workflows. This project aims to unify these pieces into a single pipeline that segments multimodal MRI scans and generates expert-level diagnostic reports, with continual learning keeping the models current and explainability methods making their outputs interpretable. By creating an end-to-end ecosystem, the work bridges the gap between research prototypes and tools that radiologists can actually use in practice.

At the technical core of the project is the Mixture of Modality Experts (MoME+) segmentation model, extended with continual learning strategies such as Elastic Weight Consolidation (EWC) and replay memory. These strategies let the system adapt as new datasets or imaging modalities are introduced while reducing catastrophic forgetting, the loss of performance on earlier data that occurs when a network is naively fine-tuned on new data. Segmentation outputs are then passed to a domain-adapted large language model (LLM) trained on synthetic and expert-reviewed clinical data. The LLM interprets the tumor masks, extracts structured findings, and generates human-readable diagnostic reports. For transparency, explainability methods such as Grad-CAM overlays are integrated, letting clinicians see which image regions most influenced the segmentation.
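Elastic Weight Consolidation adds a quadratic penalty that anchors each parameter θ_i to its value θ*_i after the previous task, weighted by a diagonal Fisher information estimate F_i: L(θ) = L_new(θ) + (λ/2) Σ_i F_i (θ_i − θ*_i)². Replay memory instead keeps a small sample of earlier training examples to mix into new batches. The following is a minimal sketch of both ideas in plain Python over flat parameter lists, not the MoME+ training code: the names `diagonal_fisher`, `ewc_penalty`, and `ReplayBuffer` are hypothetical, the reservoir-sampling fill policy is one common choice assumed here, and a real implementation would work on framework tensors.

```python
import random
from typing import Any, List, Sequence


def diagonal_fisher(per_sample_grads: Sequence[Sequence[float]]) -> List[float]:
    """Diagonal Fisher estimate: mean squared gradient per parameter.

    per_sample_grads[k][i] is the gradient of sample k's log-likelihood with
    respect to parameter i, computed on data from the *previous* task.
    """
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def ewc_penalty(theta: Sequence[float], theta_star: Sequence[float],
                fisher: Sequence[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2."""
    return 0.5 * lam * sum(f * (t - s) ** 2
                           for t, s, f in zip(theta, theta_star, fisher))


class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling so
    that every example seen so far is retained with equal probability."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[Any] = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item: Any) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Replace a random slot with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k: int) -> List[Any]:
        """Draw up to k stored examples to mix into a new-task batch."""
        return self.rng.sample(self.items, min(k, len(self.items)))
```

Usage: with per-sample gradients `[[1.0, 0.0], [3.0, 2.0]]`, `diagonal_fisher` gives `[5.0, 2.0]`, and `ewc_penalty([1.0, 1.0], [0.0, 0.0], [5.0, 2.0], lam=2.0)` returns `7.0`; during training this term is added to the new task's loss. Reservoir sampling keeps the buffer a uniform sample of everything seen so far, however many scans arrive.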

The ecosystem is delivered through a modular, web-based platform built with a modern backend (Django, Flask, PostgreSQL) and a responsive frontend (React, Tailwind). Clinicians can upload MRI scans, visualize segmentation outputs, and instantly receive a draft diagnostic report, all within a secure and user-friendly interface. Deployed on cloud infrastructure and optimized for real-time inference, the system is designed to scale for use in hospitals, telemedicine services, and research institutions. The project contributes scientifically by advancing continual learning in medical imaging and by demonstrating how multimodal AI can be packaged into an interpretable, end-to-end diagnostic tool. In the long run, it offers a pathway toward more accessible, efficient, and intelligent radiology support systems that can reduce workload pressures while improving diagnostic consistency.
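Between the segmentation model and the report-drafting LLM, the upload-to-report flow needs a step that turns a label mask into structured findings. This is a minimal sketch of that step under stated assumptions: it uses a hypothetical BraTS-style label map (1 = necrotic core, 2 = peritumoral edema, 4 = enhancing tumor) and a known voxel volume, the names `LABELS`, `extract_findings`, and `build_report_prompt` are hypothetical, the mask is a nested list rather than an array, and the actual LLM call is omitted because the project's model interface is not described here.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical BraTS-style label map; the project's real label scheme may differ.
LABELS = {1: "necrotic core", 2: "peritumoral edema", 4: "enhancing tumor"}


def extract_findings(mask: List[List[List[int]]], voxel_mm3: float) -> Dict[str, float]:
    """Convert a 3-D label mask (slices x rows x cols) into region volumes in mL."""
    counts = Counter(v for plane in mask for row in plane for v in row)
    # voxel count * voxel volume (mm^3) / 1000 = millilitres
    findings = {name: counts[label] * voxel_mm3 / 1000.0
                for label, name in LABELS.items()}
    # Whole tumor is the union of all labelled sub-regions.
    findings["whole tumor"] = sum(findings.values())
    return findings


def build_report_prompt(findings: Dict[str, float]) -> str:
    """Format structured findings as input for a report-drafting LLM."""
    lines = [f"- {name}: {vol:.2f} mL" for name, vol in findings.items()]
    return ("Draft a brain MRI radiology report from these "
            "segmentation-derived findings:\n" + "\n".join(lines))
```

Usage: for an illustrative one-slice mask `[[[0, 1, 2], [4, 4, 0]]]` with 1000 mm³ voxels, `extract_findings` reports 1.0 mL necrotic core, 1.0 mL edema, 2.0 mL enhancing tumor, and 4.0 mL whole tumor. Passing computed numbers rather than asking the LLM to estimate them is one way to keep quantitative statements in the draft report tied to the segmentation.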

Faculty

- Dr. Muhammad Moazam Fraz

- Dr. Muhammad Naseer Bajwa

Students

- Ahmed Sultan

- Farhan Kashif